Deploying AI Cost-Effectively: A Practical Framework

It's 2025. AI is no longer a futuristic concept but a practical necessity. Many businesses are moving beyond initial AI experiments and now face a harder question: how to deploy high-performing AI solutions without burning through their budgets.

Here I share my recent AI deployment – a sentiment analysis and text rephrasing system designed to elevate user experience within a company previously reliant on rudimentary, rule-based sentiment tools.

I replaced a basic rule-based sentiment tool with a more nuanced AI solution, dropping annual costs from a projected $700,000+ to under $50,000. The shift preserved performance, strengthened data security, and created a user experience that feels both personalized and scalable.

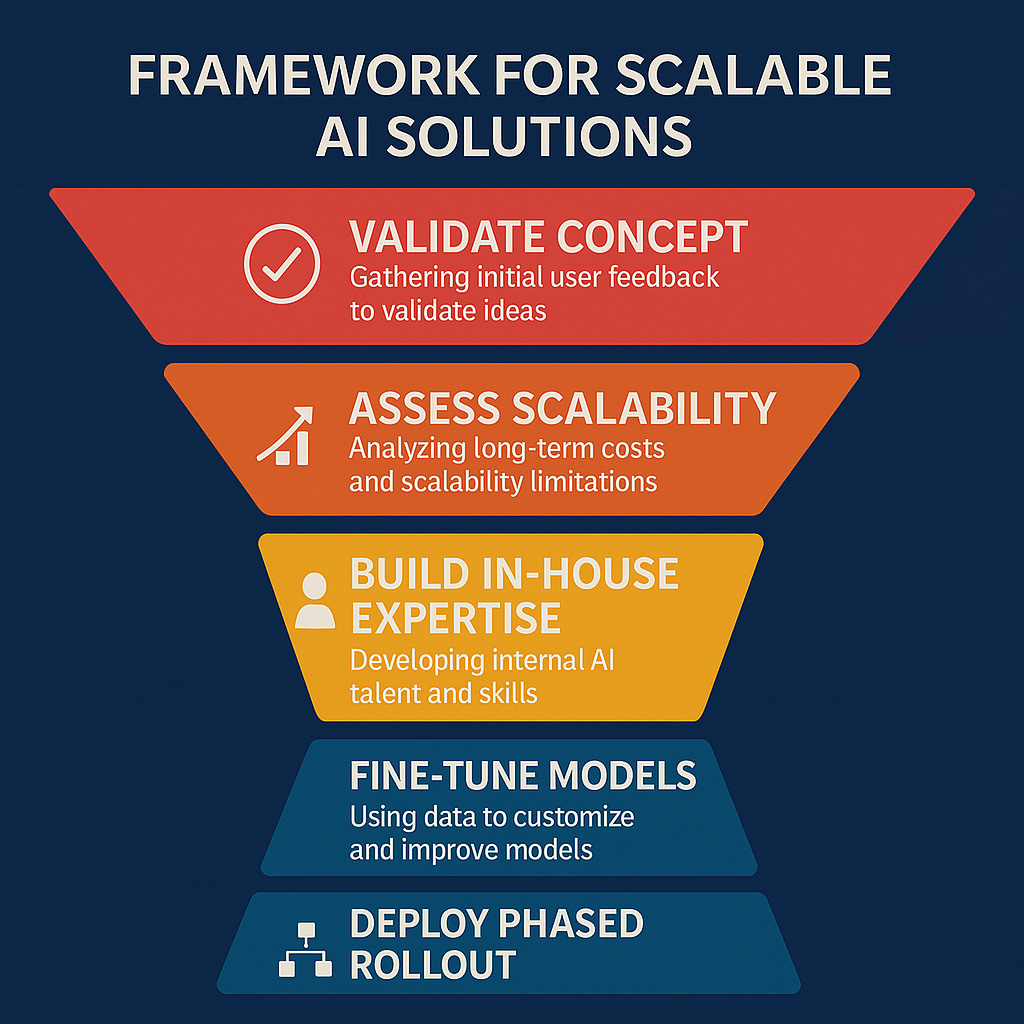

TL;DR Framework: Scaling an AI Solution Cost-Effectively

API-Driven Prototyping: Utilize third-party APIs for rapid concept validation and initial user feedback.

Scalability Assessment & Cost Analysis: Analyze long-term API costs at scale. Recognize the inherent limitations and potential financial unsustainability of API-dependent solutions.

In-House Expertise & Open-Source Adoption: Invest in building internal AI talent and embrace open-source models. This shift is crucial for long-term cost control, customization, and strategic advantage.

Data-Driven Fine-Tuning: Leverage data collected during prototyping to create custom datasets for fine-tuning open-source models.

Phased Deployment: Implement a phased rollout (beta programs, parallel testing) with rigorous validation and quality assurance at each stage.

Continuous User Feedback: Embed mechanisms for ongoing user feedback to drive improvements.

Keep reading for step-by-step details.

The Starting Point: Rudimentary Systems and Rising Expectations

We began with a basic, rule-based sentiment system—functional, but too limited for modern needs. Users wanted not just sentiment detection, but also AI-driven text rephrasing aligned with company guidelines. Our objective: build an advanced AI solution for nuanced sentiment analysis and intelligent rewriting, all while integrating with legacy systems.

Phase 1: API Prototyping – Quick Validation

The best place to start AI exploration is with available third-party APIs. Their appeal is immediate: rapid prototyping and validation of core concepts. This approach let us quickly show internal stakeholders the impact and promise of these AI features. The low starting cost of the “pay-as-you-go” model, around $3-15 per million tokens, initially seemed like a huge benefit for this experimental phase. It allowed us to quickly answer two crucial questions: Is advanced sentiment detection valuable? Can AI-powered rephrasing improve user communication?

The answer to both was a resounding yes.

The API Bottleneck: Scalability vs. Cost

Moving from prototyping to planning a broader deployment quickly exposes the limitations of APIs.

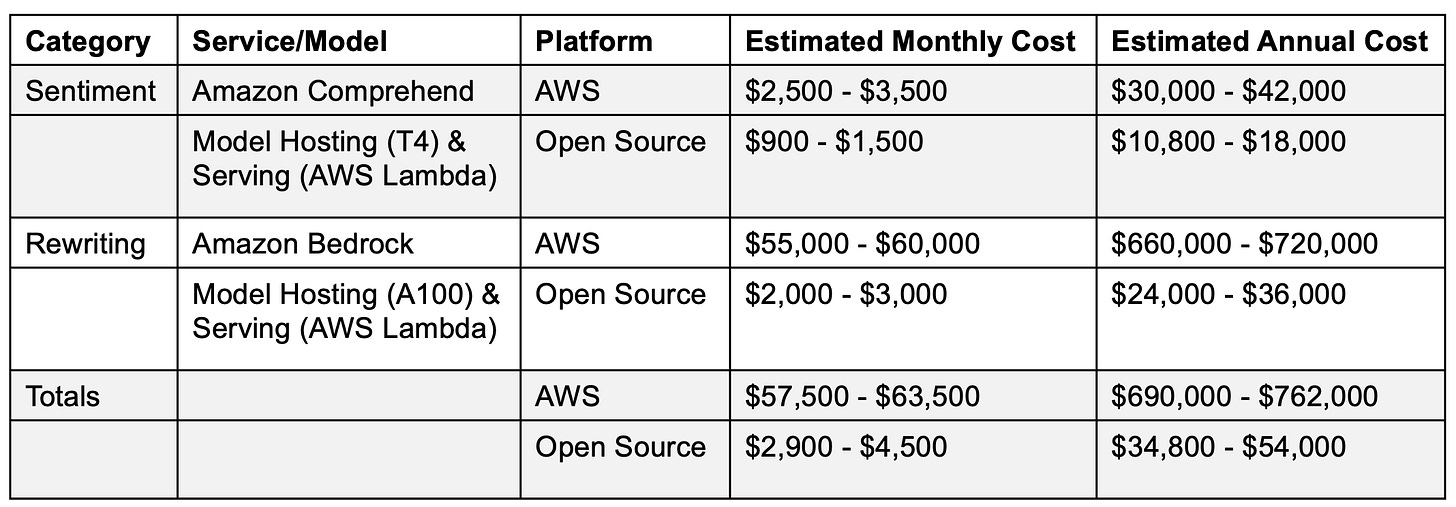

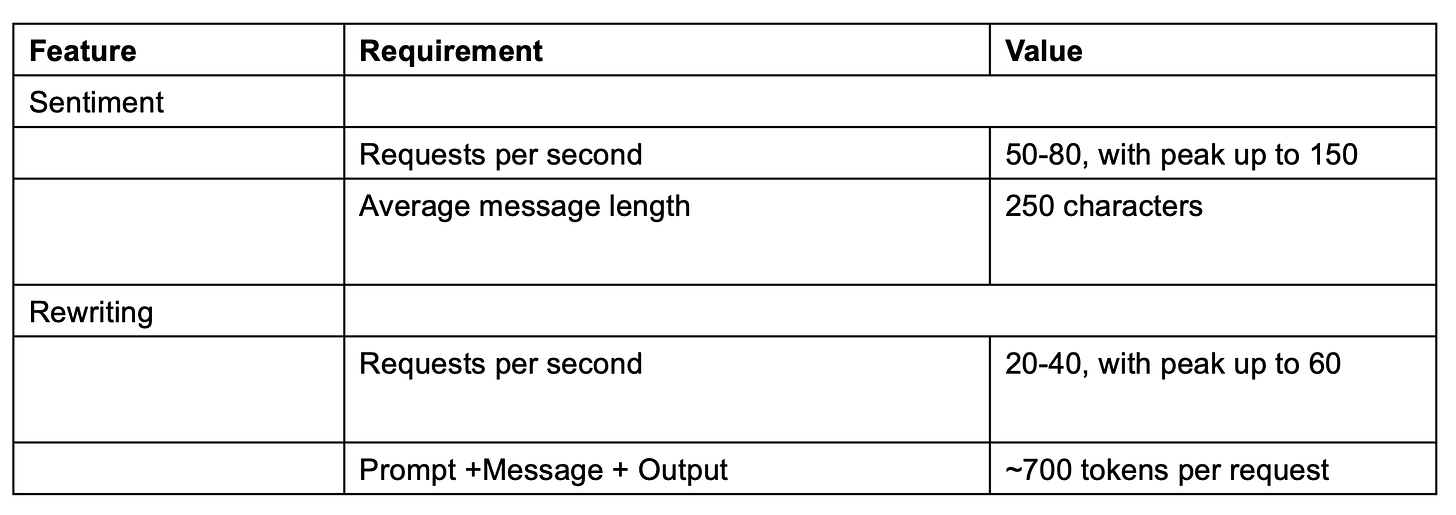

Initial costs were manageable, but projections for scaling became alarming. For this case, estimates revealed that API costs for sentiment and rephrasing could exceed $700,000 annually, a prohibitive figure. Two factors drove this: our high throughput requirements called for dedicated server capacity, and cutting-edge LLMs are priced too high for tasks like these. The lack of customization options was a further challenge. User feedback emphasized the need for personalized models and higher request throughput, capabilities that are difficult and costly to achieve with standard APIs.
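To make this kind of projection concrete, here is a minimal sketch of the token-based part of the estimate. The workload numbers (`REQUESTS_PER_DAY`, `TOKENS_PER_REQUEST`) are hypothetical placeholders, not the real traffic behind the $700,000 figure, and the sketch ignores dedicated-capacity charges entirely.

```python
def annual_api_cost(requests_per_day: float,
                    tokens_per_request: float,
                    usd_per_million_tokens: float) -> float:
    """Estimate yearly pay-as-you-go spend for a token-priced API."""
    tokens_per_year = requests_per_day * tokens_per_request * 365
    return tokens_per_year / 1_000_000 * usd_per_million_tokens


# Hypothetical workload, NOT the article's real traffic figures.
REQUESTS_PER_DAY = 200_000
TOKENS_PER_REQUEST = 1_000  # prompt and completion tokens combined

# Evaluate both ends of the $3-15 per million tokens range quoted earlier.
for price in (3.0, 15.0):
    cost = annual_api_cost(REQUESTS_PER_DAY, TOKENS_PER_REQUEST, price)
    print(f"${price:.2f} per million tokens -> ${cost:,.0f} per year")
```

With these assumed inputs the estimate ranges from $219,000 to $1,095,000 per year, which shows how quickly a cheap per-token price adds up once volume is high. Plug in your own traffic numbers before drawing conclusions.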

While APIs were excellent for initial exploration, they were unsustainable for long-term, scalable solutions. So, what’s next?

Phase 2: Open-Source Advantage

Recognizing these limitations pushed us to look for an alternative: building an in-house AI solution on open-source models. The objective was a solution that is both high-performing and economically viable at scale.

We turned to Hugging Face, a go-to hub for selecting the right model. After a quick review, we found that BERT-family models (RoBERTa, DistilBERT, ModernBERT) excel at sentiment analysis. Rigorous benchmarking, including on cost-optimized hardware such as AWS Inferentia, showed that they offer both high performance and low running costs.

The main hurdle with open-source models is fine-tuning them for specific needs—a step that requires curated data. Luckily, our API prototyping phase gave us exactly that. This fine-tuning was transformative, creating specialized, context-aware AI that outperforms generic APIs while slashing costs.
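As a rough illustration of how prototype logs can become fine-tuning data, the sketch below filters, deduplicates, and splits logged API outputs. The record schema (`text`, `api_label`, `user_accepted`), the label set, and the sample rows are all assumptions made for this example, not the actual data format used in the project.

```python
import json
import random

# Hypothetical records logged during API prototyping. The field names
# ("text", "api_label", "user_accepted") are assumptions for illustration.
RAW_LOGS = [
    {"text": "Thanks, this is great!", "api_label": "positive", "user_accepted": True},
    {"text": "  thanks, this is great!  ", "api_label": "positive", "user_accepted": True},
    {"text": "The delay is unacceptable.", "api_label": "negative", "user_accepted": True},
    {"text": "Meeting moved to 3pm.", "api_label": "neutral", "user_accepted": True},
    {"text": "Fine, whatever.", "api_label": "positive", "user_accepted": False},
    {"text": "Hmm.", "api_label": "mixed", "user_accepted": True},
    {"text": "This broke our workflow.", "api_label": "negative", "user_accepted": True},
]

LABEL_IDS = {"negative": 0, "neutral": 1, "positive": 2}


def build_dataset(logs, eval_fraction=0.25, seed=0):
    """Filter, deduplicate, and split logged API outputs into train/eval sets."""
    seen = set()
    examples = []
    for record in logs:
        text = record["text"].strip()
        key = text.lower()
        # Keep only non-empty examples that a user confirmed.
        if not text or not record["user_accepted"]:
            continue
        # Drop unknown labels and case-insensitive duplicates.
        if record["api_label"] not in LABEL_IDS or key in seen:
            continue
        seen.add(key)
        examples.append({"text": text, "label": LABEL_IDS[record["api_label"]]})

    random.Random(seed).shuffle(examples)
    n_eval = max(1, int(len(examples) * eval_fraction)) if examples else 0
    return examples[n_eval:], examples[:n_eval]


train_set, eval_set = build_dataset(RAW_LOGS)
for row in train_set:
    print(json.dumps(row))  # one JSON object per line (JSONL)
```

Keeping only user-confirmed examples is one possible quality filter. In practice you would likely add human spot checks as well, since labels produced by an API can carry that API's mistakes into the fine-tuned model.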

Phase 3: Ensuring Quality and Performance

When going to production, phase the rollout carefully.

Start by rolling out the new in-house solution to a representative subset of users. This controlled environment lets you assess real-world performance and iterate based on live usage patterns. Performance testing is just as crucial. Because an existing legacy system already served all users, we mirrored live traffic to our new model in “shadow mode.” This parallel approach, invisible to users, provided authentic performance data under real-world conditions.
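The shadow-mode idea can be sketched in a few lines. This is a simplified illustration, not the production setup: `legacy_sentiment` and `candidate_sentiment` are hypothetical stand-ins for the rule-based system and the new model, and an in-memory list stands in for real logging.

```python
import time
from concurrent.futures import ThreadPoolExecutor

NEGATIVE_WORDS = {"bad", "terrible", "angry"}


def legacy_sentiment(text):
    """Hypothetical stand-in for the rule-based system users already see."""
    words = text.lower().split()
    return "negative" if any(w in NEGATIVE_WORDS for w in words) else "positive"


def candidate_sentiment(text):
    """Hypothetical stand-in for the new model under evaluation."""
    if not text.strip():
        raise ValueError("empty input")
    if "not bad" in text.lower():
        return "positive"
    return legacy_sentiment(text)


shadow_log = []  # in-memory stand-in for a real log sink
_executor = ThreadPoolExecutor(max_workers=4)


def _shadow_call(text, legacy_label):
    """Run the candidate off the request path and record the comparison."""
    entry = {"text": text, "legacy": legacy_label, "candidate": None,
             "latency_ms": None, "error": None}
    try:
        start = time.perf_counter()
        entry["candidate"] = candidate_sentiment(text)
        entry["latency_ms"] = (time.perf_counter() - start) * 1000
    except Exception as exc:  # a candidate failure must never reach the user
        entry["error"] = repr(exc)
    shadow_log.append(entry)


def handle_request(text):
    """Serve the legacy answer; mirror the request to the candidate."""
    legacy_label = legacy_sentiment(text)
    _executor.submit(_shadow_call, text, legacy_label)
    return legacy_label


requests = ["This is terrible", "Not bad at all", "Great work", ""]
responses = [handle_request(r) for r in requests]
_executor.shutdown(wait=True)  # wait for mirrored calls before reporting

compared = [e for e in shadow_log if e["error"] is None]
agreement = sum(e["legacy"] == e["candidate"] for e in compared) / len(compared)
print(f"agreement: {agreement:.0%}, errors: {len(shadow_log) - len(compared)}")
```

The key design choice is that users only ever receive the legacy answer, while disagreements, latency, and candidate errors are recorded on the side for later analysis.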

Quality assurance was paramount. An internal review team evaluated the new model’s accuracy, quantifying improvements over the old rule-based system. To enhance efficiency, I also incorporated an LLM as a judge to augment human review, while maintaining critical oversight for long-term accuracy and stability.
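One way to combine an LLM judge with human oversight is a simple triage loop, sketched below. Everything here is an assumption for illustration: `stub_judge` is a keyword rule standing in for a real LLM call, and the `audit_rate` parameter and sample predictions are hypothetical.

```python
import random


def stub_judge(text, label):
    """Placeholder for a real LLM judge call (hypothetical keyword rule)."""
    expected = "negative" if "refund" in text.lower() else "positive"
    return "agree" if label == expected else "disagree"


def triage(predictions, judge, audit_rate=0.1, seed=0):
    """Route judge disagreements, plus a random audit sample, to human review."""
    rng = random.Random(seed)
    auto_accepted, human_queue = [], []
    for item in predictions:
        verdict = judge(item["text"], item["label"])
        # Humans see every disagreement and a random slice of agreements,
        # so the judge itself stays under ongoing scrutiny.
        if verdict != "agree" or rng.random() < audit_rate:
            human_queue.append(item)
        else:
            auto_accepted.append(item)
    return auto_accepted, human_queue


# Hypothetical model outputs awaiting review.
PREDICTIONS = [
    {"text": "I want a refund now", "label": "negative"},
    {"text": "Love the new update", "label": "positive"},
    {"text": "Refund was processed quickly, thanks", "label": "positive"},
]

accepted, queued = triage(PREDICTIONS, stub_judge, audit_rate=0.1)
print(f"auto-accepted: {len(accepted)}, sent to humans: {len(queued)}")
```

The random audit sample matters because a judge that is never checked can drift or share the model's blind spots. Reviewing a slice of the cases where it agreed keeps human oversight in the loop.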

Positive validation at each stage paved the way for wider beta releases and the introduction of enhanced features like AI-powered rephrasing, proactively assisting users in aligning communications with company standards.

Continuous Improvement: User Feedback as a Compass

Throughout beta and beyond, user feedback was our compass. Direct feedback mechanisms within the user interface, asking about model accuracy and helpfulness, provided a continuous stream of insights and data for model improvements. Engaged users who left extra comments gave us a deeper understanding of user needs and of ways to improve the experience.
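A feedback stream like this is only useful once it is aggregated. The sketch below summarizes in-UI feedback per model version. The event schema (`model_version`, `accurate`, `helpful`, `comment`) and the sample events are assumptions for illustration, not the actual feedback format.

```python
from collections import defaultdict

# Hypothetical in-UI feedback events; the schema is an assumption.
FEEDBACK = [
    {"model_version": "finetuned-v1", "accurate": True, "helpful": True, "comment": ""},
    {"model_version": "finetuned-v1", "accurate": False, "helpful": False,
     "comment": "Sarcasm was read as positive."},
    {"model_version": "finetuned-v1", "accurate": True, "helpful": True, "comment": "  "},
    {"model_version": "finetuned-v2", "accurate": True, "helpful": False,
     "comment": "Rephrasing sounded too formal."},
]


def summarize(events):
    """Aggregate accuracy and helpfulness rates and collect comments per version."""
    stats = defaultdict(lambda: {"n": 0, "accurate": 0, "helpful": 0, "comments": []})
    for event in events:
        s = stats[event["model_version"]]
        s["n"] += 1
        s["accurate"] += int(event["accurate"])
        s["helpful"] += int(event["helpful"])
        comment = event["comment"].strip()
        if comment:  # ignore empty or whitespace-only comments
            s["comments"].append(comment)
    return {
        version: {
            "responses": s["n"],
            "accuracy_rate": s["accurate"] / s["n"],
            "helpful_rate": s["helpful"] / s["n"],
            "comments": s["comments"],
        }
        for version, s in stats.items()
    }


summary = summarize(FEEDBACK)
for version, row in summary.items():
    print(version, f"accuracy={row['accuracy_rate']:.0%}",
          f"helpful={row['helpful_rate']:.0%}", f"comments={len(row['comments'])}")
```

Items flagged as inaccurate, especially those with comments, are natural candidates for relabeling and inclusion in the next fine-tuning round.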

The Cost Transformation

The financial impact of this transition was dramatic. Projected annual API costs of $700,000+ were transformed into in-house operational costs under $50,000 annually. This wasn’t just cost-cutting; it was a strategic shift.
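For a sense of scale, the arithmetic behind those two figures is shown below. Both numbers are bounds taken from the text ("$700,000+" and "under $50,000"), so the results are minimums rather than exact values.

```python
# Figures from the article; both are bounds, so results are minimums.
projected_api_cost = 700_000  # USD per year, lower bound ("$700,000+")
in_house_cost = 50_000        # USD per year, upper bound ("under $50,000")

reduction = 1 - in_house_cost / projected_api_cost
ratio = projected_api_cost / in_house_cost

print(f"Cost reduction: at least {reduction:.1%}")            # 92.9%
print(f"API cost: at least {ratio:.0f}x the in-house cost")   # 14x
```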

If your AI pilot never evolves beyond a costly experiment, you’re missing out on genuine ROI. It’s easier—and cheaper—to integrate AI into your business than you might think, provided you have the right plan.

Subscribe to my newsletter for more real-world, cost-saving AI strategies.